Google Algorithm History

What exactly are Google Algorithms?

Google's algorithms are a complex system that retrieves data from its search index and delivers the best possible results for a query in real time. On its search engine results pages, Google uses a combination of algorithms and numerous ranking factors to rank webpages by relevance. Business websites with high rankings earned more profit and made more connections than low-ranking ones, so webmasters and website owners began using cheap shortcut tactics to boost their rankings, which produced low-quality and spam-content websites. This hurt users. Because user trust is Google's main priority, it formed a team to maintain website quality and keep its results useful, and as a result it developed a number of algorithms.

Panda

From: February 24, 2011

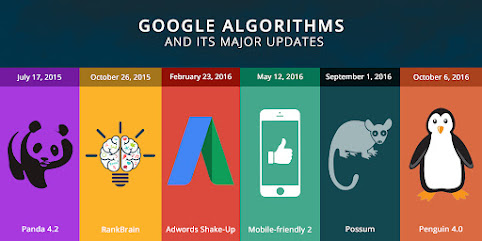

The Panda algorithm update assigns a "quality score" to web pages, and that score is then used as a ranking factor. Panda initially ran as a separate filter that was refreshed periodically, but it was permanently incorporated into Google's core algorithm in January 2016. Since then, updates have rolled out more frequently, so Panda penalties and recoveries happen faster.

This update targeted content spam. Some examples of content spam are:

- Content Duplication - Copying content from other websites.

- Low-Quality Websites - Websites with poor content or with grammatical and spelling errors.

- Thin Webpages - Pages with very little content.

- Keyword Stuffing - Excessive use of keywords on a webpage for the sole purpose of ranking.

- Content Spinning - Rewriting or rearranging the same content so it can be reused on different pages.

The Panda algorithm was very effective at reducing spam content in search results. Panda 4.0, a major revision of the algorithm, was released on May 19, 2014.

Penguin

From: April 24, 2012

Google Penguin's goal is to penalize websites that have artificial backlinks. After this update, low-effort link building, such as buying links from link farms and private blog networks (PBNs), no longer worked reliably.

Google introduced the Penguin algorithm update in 2012 to target link spam, which includes:

- Paid Links - Links purchased from a link seller.

- Link Exchanges - Websites trading links with each other.

- Link Schemes - Programs or applications that automatically create links.

- Comment Spam - Posting links in the comment sections of websites.

- Wiki Spam - Adding promotional links to Wikipedia or other editable wiki pages.

Penguin also targets link farms, automated link building, low-quality links, excessive internal linking, over-optimized anchor text, and manipulative guest blogging. Penguin 4.0 was released in 2016, and it acts against link spam in real time rather than in periodic refreshes.

With Penguin 4.0, released after nearly two years without a Penguin update, Google modified the algorithm for the final time. Penguin was integrated into Google's core search algorithm, which meant its data was constantly refreshed. Penguin also became more granular, so it may affect rankings for specific pages, sections of a site, or the entire site.

Pigeon

From: July 24, 2014

The goal of this algorithm change was to produce more useful, relevant, and accurate local search results that are more closely tied to traditional web search ranking signals. According to Google, the new algorithm improves its distance and location ranking parameters.

Hummingbird

The Google Hummingbird algorithm was introduced in 2013. It is based on semantic search, which focuses on the meaning and intent behind a query rather than on individual keywords. By understanding related topics, it can answer conversational questions directly. User feedback is used to rank semantic search results. The disadvantage of this algorithm is that incorrect feedback can still appear in the results.

RankBrain Algorithm

Google introduced the RankBrain algorithm in 2015, and it is considered part of the Hummingbird algorithm. RankBrain uses artificial intelligence, specifically machine learning, to interpret search queries and to track how users interact with the results. In effect, the system takes on interpretive work a human would otherwise do, and search results are generated automatically.

Mobilegeddon

Mobilegeddon, released on April 21, 2015, was a mobile-friendly update. As more people began to use the internet on their smartphones, Google began to prioritize mobile-friendly websites and adjusted its rankings accordingly. A mobile-friendly website can be read without zooming, has no unplayable content, requires no horizontal scrolling, and spaces its tap targets appropriately. For about a year, pages with strong content could still rank well without being mobile-friendly, but after the Mobile-Friendly 2.0 update on May 12, 2016, Google applied this signal more strictly.

Parked Domain Update

A domain can be registered without building a website on it. Such domains are known as parked domains. Their pages have no real content but may display advertisements, and they could still be indexed by Google. Because parked domains are unhelpful to users, Google introduced the Parked Domain update.

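EMD (Exact Match Domain)

The EMD update was released on September 28, 2012. An exact match domain is a domain name built from the exact keywords a site wants to rank for. The EMD update stopped low-quality sites from ranking well simply because their domain name matched a search query. In addition, if such a website is inactive for an extended period of time, Google may delist it.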

Pirate Update

If a website's content, images, or videos are copied to another website without the owner's permission, this can be reported to Google, and action will be taken against the infringing site under the Digital Millennium Copyright Act (DMCA) of 1998.

BERT (Bidirectional Encoder Representations from Transformers)

The BERT update was introduced in October 2019. It is a natural language processing update that helps Google understand the context of the words in a query. It was first released for English searches, and in December 2019 it was expanded to more than 70 languages, including Hindi and Arabic.
